Batching

Fortunately, this is a common problem, and JDBC provides a way to batch multiple SQL statements into a single call. Here it is the code (we skip the parts that are not changed):

int batchCount = 0;

Loan loan;

while ((loan = csv.read()) != null) {

setParams(prepStmt, loan);

prepStmt.addBatch();

if (++batchCount == BATCH_SIZE) {

prepStmt.executeBatch();

connection.commit();

batchCount = 0;

stats.send(BATCH_SIZE);

}

}

if (batchCount != 0) {

prepStmt.executeBatch();

connection.commit();

stats.send(batchCount);

}For each insert, we do an addBatch. When the number of inserts added to the batch

reaches the BATCH_SIZE, then we execute the batch and commit the transaction,

and then reset the batch counter.

It is important not to forget to commit the transaction, after executing the batch,

since otherwise the changes remain in memory of the query engine and for very large batches

one can run out of memory, or in any case, one it is using memory unnecessarily that could

be used for caching blocks and making for efficient the access for other queries.

Since the number of rows might not be an exact multiple of the batch size,

we check after the loop if there is any outstanding batch and if so, execute it.

Regarding what is the optimal batch size, it mainly depends on the size of each row. A batch size of 1000 is a good starting point. You can from there experiment with larger and smaller sizes to find the optimal point.

Problem:

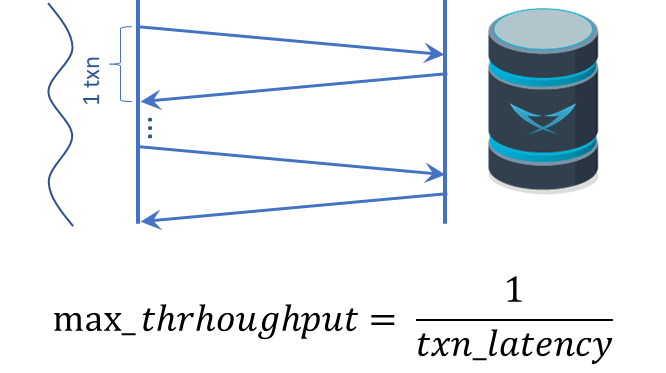

But wait, is that enough? Not really. Our application has a single thread. This single thread is capable of injecting a load at a certain rate, but if we have a distributed database like LeanXcale, we will not really be able to use its full potential. Each client thread will use at most one storage server that only uses a single core of the database server, so if your database server has more than one core (sum of all cores across all nodes used to run the database), say n cores, you will use at most 1 of n cores. Note that when you send the request to the database server, the database server is completely idle, and the same is true after the database server responds to your request.

Assume that a single transaction (our batch insert) takes 10ms to execute. This means that we can do a maximum of 1000ms/10ms=100 transactions per second, even if the database is capable of doing 1 billion transactions per second.

Solution:

The solution lies in the use of multiple threads for the ingestion of the data.